Facial Animation Research

Summer 2000

In the summer of 2000 I was accepted onto a funded student research scheme within the Department of Computer Science. As part of this project, I helped to develop a reasoning, expressive, animated virtual assistant. My contribution was a muscle-based facial animation technique that supports facial expressions and lip-synchronisation with text-to-speech output. I later continued this work as part of my fourth-year project on augmented reality.

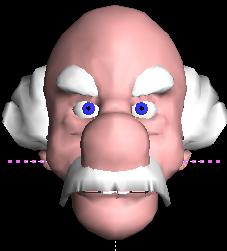

Sample screen-shots of the completed muscle-based model in action

Images (left-to-right):

(a) mesh before deformation,

(b) illustration of mesh lines and defined muscles (red/yellow lines),

(c) example deformation of the muscles.

Key elements

- Muscle-based models - deforming a polygon mesh with a specified set of 'virtual muscles'.

- Expression - a particular configuration of muscle contraction values.

- Animated expressions - interpolating between muscle contraction values produces a much more realistic effect than the traditional interpolation between mesh positions.

- Lip-synchronisation - using the animated expression technique to move the face between different 'expressions' for each part of the speech (phoneme).
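The four elements above can be sketched together in a few lines of Python. This is only an illustration, not the code from the project: the `Muscle` class, the cosine falloff, the 2D mesh, and the phoneme names `"AA"` and `"M"` are all assumptions made for the example. Each muscle pulls nearby vertices towards its fixed attachment point, an expression is a dictionary of contraction values, and lip-synchronisation steps through one target expression per phoneme.

```python
import math
from dataclasses import dataclass


@dataclass
class Muscle:
    # Hypothetical simplified muscle; the project's real model may differ.
    name: str
    attachment: tuple  # fixed end (x, y), e.g. anchored to bone
    insertion: tuple   # end embedded in the skin (x, y)
    radius: float      # radius of influence around the insertion


def deform(mesh, muscles, expression):
    """Return a deformed copy of the mesh for the given contraction values."""
    out = []
    for vx, vy in mesh:
        dx = dy = 0.0
        for m in muscles:
            c = expression.get(m.name, 0.0)
            dist = math.dist((vx, vy), m.insertion)
            if c == 0.0 or dist >= m.radius:
                continue  # relaxed muscle, or vertex outside its influence
            # Assumed cosine falloff: 1 at the insertion, 0 at the radius.
            w = 0.5 * (1.0 + math.cos(math.pi * dist / m.radius))
            dx += c * w * (m.attachment[0] - vx)
            dy += c * w * (m.attachment[1] - vy)
        out.append((vx + dx, vy + dy))
    return out


def blend(a, b, t):
    """Interpolate between two expressions (dicts of contraction values)."""
    names = a.keys() | b.keys()
    return {n: (1 - t) * a.get(n, 0.0) + t * b.get(n, 0.0) for n in names}


def lip_sync_frames(phonemes, visemes, steps):
    """Yield expressions that move through the target of each phoneme in turn."""
    current = {}
    for p in phonemes:
        target = visemes[p]
        for i in range(1, steps + 1):
            yield blend(current, target, i / steps)
        current = target


# One "jaw" muscle, a two-vertex mesh, and two made-up phoneme targets.
muscles = [Muscle("jaw", attachment=(0.0, -2.0), insertion=(0.0, -1.0), radius=1.0)]
mesh = [(0.0, -1.0), (0.0, 0.5)]
visemes = {"AA": {"jaw": 0.5}, "M": {"jaw": 0.0}}
frames = [deform(mesh, muscles, e)
          for e in lip_sync_frames(["AA", "M"], visemes, steps=2)]
```

Here `frames` holds four meshes: the jaw vertex opens in two steps for `"AA"` and closes again in two steps for `"M"`, while the second vertex lies outside the muscle's radius and never moves. Because this sketch is linear in the contraction values, it does not by itself show the realism gain described above; that would need a non-linear muscle model.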